Histogram-Based Gradient Boosting Regression Tree for Time Series Forecasting

Presenting and Coding a Machine Learning Model on Time Series

This article discusses a machine learning model called the Histogram-Based Gradient Boosting Regressor. We will download a time series from an online source, transform it to make it stationary, and fit the model to forecast the value at t+1 at each time step. Performance is evaluated with a single simple metric: directional accuracy, also called the hit ratio.

Intuition of the Model

Histogram-Based Gradient Boosting (HistogramGB or HistGB) is a high-performance variant of gradient boosting designed to scale efficiently to large datasets with both numerical and categorical features. Popularized by LightGBM and implemented in scikit-learn as HistGradientBoostingRegressor, it improves the computational and memory efficiency of traditional Gradient Boosted Decision Trees (GBDTs) by approximating feature distributions with histograms. This approximation allows for faster training without significant loss in predictive accuracy, making it well suited to large-scale regression problems.

HistGradientBoostingRegressor builds upon the core idea of gradient boosting, which is to sequentially fit regression trees to the negative gradients of a loss function (for squared error, these are simply the residuals), but introduces key optimizations in how feature values are processed.

Instead of evaluating splits at every unique feature value, the histogram-based method discretizes continuous feature values into k bins (typically 256). Each feature's values are bucketed during a preprocessing phase, reducing the number of candidate split points and accelerating tree construction.

Let x ∈ ℝ^p denote the input features. The binning process maps each feature dimension x_j to a discrete index b_j ∈ {1, …, k}. The bin boundaries are computed once per feature over the training data, using quantiles or uniform widths depending on the implementation. Once the features are binned, the model accumulates the gradient and hessian sums of the samples falling in each bin (the histograms) and uses these sums to score potential split points efficiently.
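To make the binning step concrete, here is a minimal sketch of quantile binning for a single feature. The helper names `fit_bin_edges` and `to_bin_index` are hypothetical, the indices are 0-based rather than 1-based, and this is only an illustration of the idea, not how any particular library implements it.

```python
import numpy as np

def fit_bin_edges(x, n_bins):
    """Return the n_bins - 1 interior quantile thresholds for one feature."""
    quantiles = np.linspace(0, 1, n_bins + 1)[1:-1]
    return np.quantile(x, quantiles)

def to_bin_index(x, edges):
    """Map each value to an integer bin index in {0, ..., n_bins - 1}."""
    return np.searchsorted(edges, x, side="right")

x = np.array([0.1, 0.4, 0.5, 2.0, 3.5, 7.0, 9.0, 10.0])
edges = fit_bin_edges(x, n_bins=4)   # three thresholds for four bins
bins = to_bin_index(x, edges)
print(edges)
print(bins)  # -> [0 0 1 1 2 2 3 3]
```

With quantile thresholds, each bin receives roughly the same number of samples, so the tree only has to consider `n_bins - 1` candidate thresholds per feature instead of one per unique value.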

This approximation yields significant gains in both speed and memory usage, especially for large datasets with many continuous features, while maintaining predictive performance comparable to exact split-finding methods.

The core steps of HistGradientBoostingRegressor are as follows:

1. Start with an initial prediction, typically the mean of the target variable.

2. Transform continuous features into integer bin indices.

3. At each iteration m, compute the negative gradients (residuals) of the loss function.

4. For each feature, aggregate gradient and hessian statistics into histograms based on the bin indices.

5. Identify the best split by evaluating gain metrics (e.g., reduction in squared error) using histogram statistics.

6. Fit a shallow regression tree to the residuals using the best splits.

7. Add the tree's output to the ensemble prediction, scaled by a learning rate ν.

The process is repeated for a fixed number of iterations or until convergence.
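The steps above can be sketched in a few dozen lines of NumPy. This is a deliberately simplified illustration and not scikit-learn's implementation: it assumes squared-error loss (so the negative gradient is the residual and the hessian is constant, which lets sample counts stand in for hessian sums), it uses depth-1 trees (stumps) instead of deeper shallow trees, and it ignores missing values. All function names are hypothetical.

```python
import numpy as np

def bin_features(X, n_bins):
    """Quantile-bin every column of X into integer indices 0..n_bins-1."""
    qs = np.linspace(0, 1, n_bins + 1)[1:-1]
    edges = [np.quantile(X[:, j], qs) for j in range(X.shape[1])]
    binned = np.column_stack(
        [np.searchsorted(e, X[:, j], side="right") for j, e in enumerate(edges)]
    )
    return binned, edges

def best_stump(binned, residuals, n_bins):
    """Find the (feature, bin) split with the largest squared-error reduction."""
    total_sum, total_cnt = residuals.sum(), len(residuals)
    best = (None, None, 0.0, 0.0, 0.0)  # feature, bin, gain, left value, right value
    for j in range(binned.shape[1]):
        # Histograms: per-bin sum of residuals and per-bin sample count
        grad_hist = np.bincount(binned[:, j], weights=residuals, minlength=n_bins)
        cnt_hist = np.bincount(binned[:, j], minlength=n_bins)
        left_sum, left_cnt = np.cumsum(grad_hist), np.cumsum(cnt_hist)
        for b in range(n_bins - 1):  # samples with bin index <= b go left
            nl, nr = left_cnt[b], total_cnt - left_cnt[b]
            if nl == 0 or nr == 0:
                continue
            sl, sr = left_sum[b], total_sum - left_sum[b]
            gain = sl**2 / nl + sr**2 / nr - total_sum**2 / total_cnt
            if gain > best[2]:
                best = (j, b, gain, sl / nl, sr / nr)
    return best

def fit_boosted_stumps(X, y, n_iter=50, learning_rate=0.1, n_bins=16):
    binned, edges = bin_features(X, n_bins)       # step 2: bin once, up front
    init = float(y.mean())                        # step 1: initial prediction
    pred = np.full(len(y), init)
    stumps = []
    for _ in range(n_iter):
        residuals = y - pred                      # step 3: negative gradients
        j, b, gain, left_val, right_val = best_stump(binned, residuals, n_bins)
        if j is None:                             # no split improves the fit
            break
        go_left = binned[:, j] <= b
        left_val, right_val = learning_rate * left_val, learning_rate * right_val
        pred += np.where(go_left, left_val, right_val)  # step 7: shrunken update
        stumps.append((j, b, left_val, right_val))
    return init, edges, stumps

def predict(model, X):
    init, edges, stumps = model
    binned = np.column_stack(
        [np.searchsorted(e, X[:, j], side="right") for j, e in enumerate(edges)]
    )
    pred = np.full(X.shape[0], init)
    for j, b, left_val, right_val in stumps:
        pred += np.where(binned[:, j] <= b, left_val, right_val)
    return pred

# Synthetic data, used only to check that the training error goes down
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 3))
y = np.sin(X[:, 0]) + 0.5 * X[:, 1] + rng.normal(scale=0.1, size=500)

model = fit_boosted_stumps(X, y)
mse_baseline = np.mean((y - y.mean()) ** 2)
mse_model = np.mean((y - predict(model, X)) ** 2)
print(mse_baseline, mse_model)
```

Note that the inner loop over candidate splits runs over bins, not over samples: once the histograms are built, the cost of scoring splits for a feature depends on `n_bins` alone, which is where the speed-up comes from.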


Using the Model to Forecast Time Series

Let’s use the model in Python to forecast a time series. We’ll choose the S&P 500 for this task, knowing that it’s almost impossible to accurately predict such a noisy dataset with simple models; the goal here is only to show how the model is put to work. The plan of attack is as follows:

1. Download the time series.

2. Difference the prices (day-over-day changes, used here as a simple stand-in for returns) to make the series stationary, an important condition for machine learning forecasting.

3. Split the data chronologically into training and test sets.

4. Fit and predict.

5. Evaluate and plot the predictions.
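Before running the full experiment, the transformation and split steps can be illustrated on a toy series. The prices below are made up for the example, and `sliding_window_view` (available in NumPy 1.20 and later) is just one possible way to build the lagged windows; the simple-returns line is shown only as an alternative transform.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

prices = np.array([100.0, 102.0, 101.0, 105.0, 107.0, 106.0])  # made-up prices

changes = np.diff(prices)            # first differences: [2, -1, 4, 2, -1]
pct_returns = changes / prices[:-1]  # simple returns, an alternative transform

num_lags = 2
# Each row holds num_lags consecutive changes; the last window is dropped
# because no target value follows it
X = sliding_window_view(changes, num_lags)[:-1]
y = changes[num_lags:]               # the change that follows each window

split = int(0.67 * len(X))           # chronological split, no shuffling
X_train, X_test = X[:split], X[split:]
y_train, y_test = y[:split], y[split:]

print(X)  # rows: [2, -1], [-1, 4], [4, 2]
print(y)  # -> [ 4.  2. -1.]
```

Keeping the split chronological matters: shuffling before splitting would let the model train on observations that come after the ones it is tested on.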

Use the following code to conduct the experiment.

from sklearn.ensemble import HistGradientBoostingRegressor

import pandas_datareader as pdr

import numpy as np

import matplotlib.pyplot as plt

def data_preprocessing(data, num_lags, train_test_split):

# Prepare the data for training

x = []

y = []

for i in range(len(data) - num_lags):

x.append(data[i:i + num_lags])

y.append(data[i+ num_lags])

# Convert the data to numpy arrays

x = np.array(x)

y = np.array(y)

# Split the data into training and testing sets

split_index = int(train_test_split * len(x))

x_train = x[:split_index]

y_train = y[:split_index]

x_test = x[split_index:]

y_test = y[split_index:]

return x_train, y_train, x_test, y_test

start_date = '1960-01-01'

end_date = '2020-01-01'

# Import the data

data = (pdr.get_data_fred('SP500', start = start_date, end = end_date).dropna())

data = np.diff(data['SP500'])

# Train-test split

x_train, y_train, x_test, y_test = data_preprocessing(data, 100, 0.80)

# Create and train the model

model = HistGradientBoostingRegressor()

model.fit(x_train, y_train)

# Make predictions on the test set

y_pred = model.predict(x_test)

# Evaluate the model

same_sign_count = np.sum(np.sign(y_pred) == np.sign(y_test)) / len(y_test) * 100

print('Hit Ratio = ', same_sign_count, '%')

# Plot the actual vs. predicted values

plt.figure(figsize=(12, 6))

plt.plot(y_test[-50:], label = 'Actual', color = 'blue')

plt.plot(y_pred[-50:], label = 'Predicted', color = 'red')

plt.legend()

plt.title('Actual vs. Predicted')

plt.ylabel('Value')

plt.show()

plt.grid()

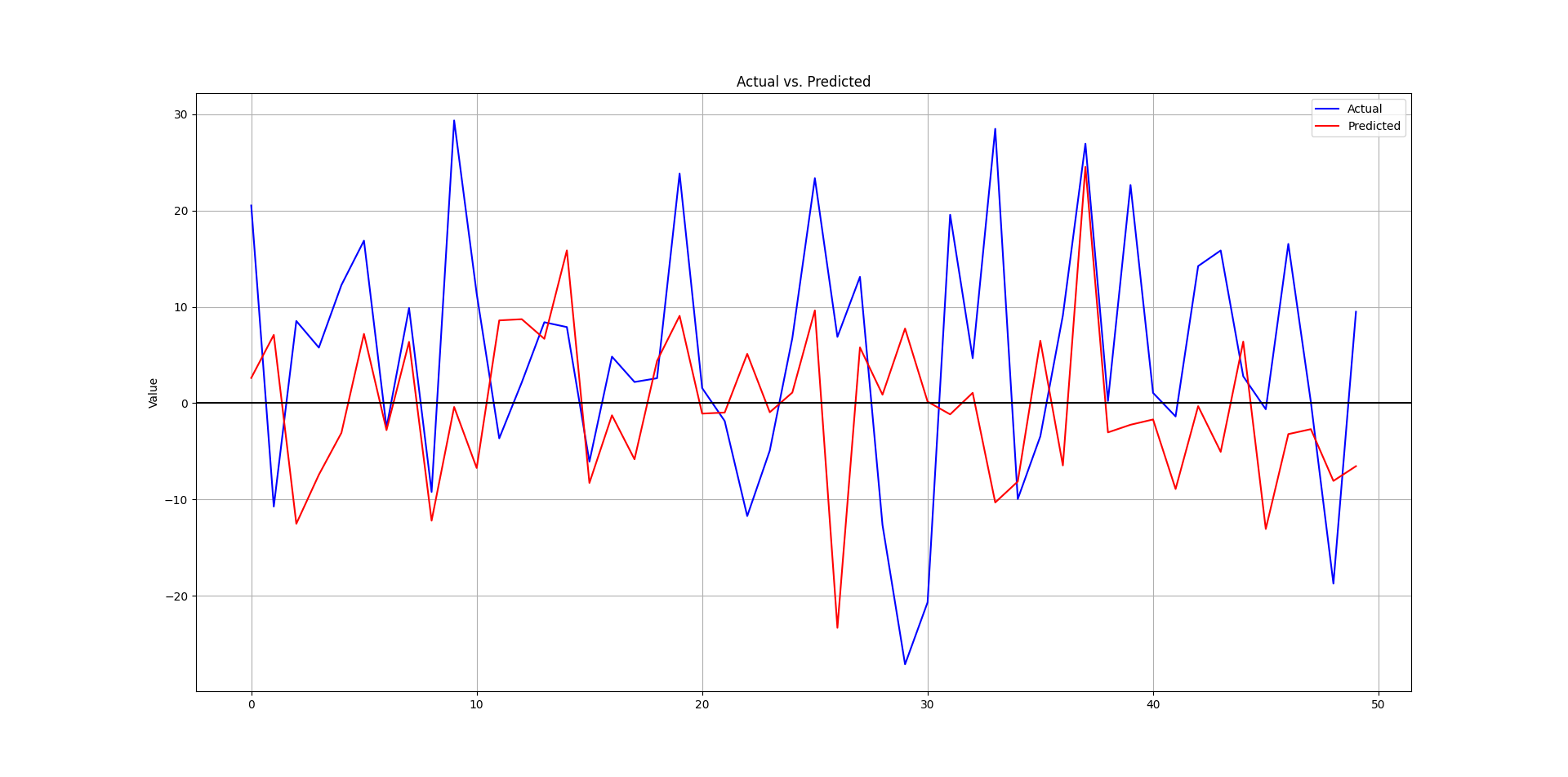

plt.axhline(y = 0, color = 'black')The following is the plot that compares the real data from the test set and the predicted data.

The following output shows the hit ratio.

48.05%

As with any technique out there, the simple (default) versions are just building blocks on which you can improve.
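A hit ratio is easier to interpret next to a naive baseline. The sketch below uses made-up numbers and a hypothetical `hit_ratio` helper; unlike the one-line calculation in the experiment, it skips observations whose actual value is exactly zero, which is an assumption on my part about how flat days should be treated.

```python
import numpy as np

def hit_ratio(y_true, y_pred):
    """Percentage of nonzero targets whose sign is predicted correctly."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    mask = y_true != 0  # flat observations carry no direction to predict
    return 100.0 * np.mean(np.sign(y_pred[mask]) == np.sign(y_true[mask]))

# Made-up values for illustration only
y_true = np.array([1.0, -2.0, 0.5, -0.3, 0.0, 2.0, 1.5, -1.0])
y_pred = np.array([0.2, 0.1, 0.3, -0.4, 0.1, -0.5, 0.7, -0.2])

always_up = np.ones_like(y_true)  # naive baseline: always predict a rise

model_score = hit_ratio(y_true, y_pred)
baseline_score = hit_ratio(y_true, always_up)
print(round(model_score, 2), round(baseline_score, 2))  # -> 71.43 57.14
```

On an index that rises more often than it falls, the always-up baseline can sit above 50%, so a model's hit ratio is more informative when it is reported alongside that number.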
