Exotic Trading Strategies on the S&P500 in Python

Creating & Coding Sentiment Strategies for Equity Indices Trading in Python

Equity trading strategies are abundant, as equities can be seen as the benchmark of trading, even ahead of FX and commodities. Volume-based strategies tend to work well in centralized markets, and many third-party providers publish proprietary indicators designed specifically for trading indices, which gives us a lot of opportunities. This article sums up the sentiment strategies we have been talking about in previous articles.

I have just published a new book after the success of New Technical Indicators in Python. It features a more complete description and addition of complex trading strategies with a Github page dedicated to the continuously updated code. If you feel that this interests you, feel free to visit the below link, or if you prefer to buy the PDF version, you could contact me on Linkedin.

The Book of Trading Strategies

Amazon.com: The Book of Trading Strategies: 9798532885707: Kaabar, Sofien: Books (www.amazon.com)

Strategy #1: The Put-Call Ratio Strategy

Sentiment Analysis is a type of predictive analytics that deals with alternative data other than the basic OHLC price structure. It is usually based on subjective polls and scores but can also be based on more quantitative measures such as expected hedges through market strength or weakness.

A call option is the right to buy a certain asset in the future at a pre-determined price while a put option is the right to sell a certain asset in the future at a pre-determined price.

Hence, when you buy a call you get to buy something later, and when you buy a put you get to sell something later. Every transaction has two sides: when you’re buying a call or a put, someone else is selling it to you. This brings two other positions that can be taken on options, selling calls and selling puts. The Put-Call indicator deals with the buyers of options and measures the number of put buyers divided by the number of call buyers. That gives us an idea of the sentiment of market participants around the specified equity (in our case it will be the US stock market).

A higher put/call ratio means that there are more put buyers (traders are betting on the asset going lower) and a lower put/call ratio signifies more call buyers (traders are betting on the rise of the asset). A known way of using this ratio in analyzing market sentiment is by evaluating the following scenarios:

A rising ratio signifies a bearish sentiment. Professionals “feel” that the market will go lower.

A falling ratio signifies a bullish sentiment. Professionals “feel” that the market will go up.
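To make the ratio concrete, here is a minimal sketch of the calculation. The volumes are hypothetical numbers chosen for illustration and are not CBOE data; the variable names are also just placeholders.

```python
# Hypothetical daily option volumes (illustrative numbers, not CBOE data)
put_volume = [450_000, 520_000, 610_000]
call_volume = [900_000, 800_000, 700_000]

# Put-call ratio: number of puts bought divided by number of calls bought
put_call_ratio = [p / c for p, c in zip(put_volume, call_volume)]

for day, ratio in enumerate(put_call_ratio, start=1):
    print(f"Day {day}: put-call ratio = {ratio:.2f}")
```

In this toy sample the ratio rises from day to day, which would be read as an increasingly bearish sentiment under the scenarios listed above.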

There are two types of ratios, the total Put-Call Ratio and the equity Put-Call Ratio. In this study we will stick to the equity one as it better reflects the speculative crowd.

Unfortunately, the up-to-date data of the Put-Call Ratio is no longer published for free on the CBOE website. Therefore, we need to find other ways to obtain it, such as combining multiple sources and signing up for free trials. I have managed to compile the latest 1,000 observations, with the S&P500’s values in parallel, as of August 2021. If you wish for more data, you may have to pay third-party data providers. In any case, the GitHub link below gives you some historical data which I have legally obtained.

GitHub - sofienkaabar/sofienkaabar: Config files for my GitHub profile.


The first step is to download the EQPCR.xlsx file to your computer, then open the Python interpreter and set the working directory to the folder where the Excel file is located. Now, we have to import it and structure it.

    import pandas as pd
    import matplotlib.pyplot as plt
    import numpy as np

    # Defining Primordial Functions
    def adder(Data, times):
        # Appends `times` zero-filled columns to the array
        for i in range(1, times + 1):
            new = np.zeros((len(Data), 1), dtype = float)
            Data = np.append(Data, new, axis = 1)
        return Data

    def deleter(Data, index, times):
        # Deletes `times` columns starting at position `index`
        for i in range(1, times + 1):
            Data = np.delete(Data, index, axis = 1)
        return Data

    def jump(Data, jump):
        # Drops the first `jump` rows
        Data = Data[jump:, ]
        return Data

    def indicator_plot_double(Data, first_panel, second_panel, window = 250):
        fig, ax = plt.subplots(2, figsize = (10, 5))
        ax[0].plot(Data[-window:, first_panel], color = 'black', linewidth = 1)
        ax[0].grid()
        ax[1].plot(Data[-window:, second_panel], color = 'brown', linewidth = 1)
        ax[1].grid()

    my_data = pd.read_excel('EQPCR.xlsx')
    my_data = np.array(my_data)

Now, we should have an array called my_data with both the S&P500’s and the Put-Call Ratio’s historical data.

Subjectively, we can place barriers at 0.80 and 0.35 as they look to bound the ratio. These will be our trading triggers. Surely, some hindsight bias exists here but the idea is not to formulate a past trading strategy, rather see if the barriers will continue working in the future.

Following the intuition, we can have the following trading rules:

Buy (Long) whenever the ratio reaches 0.80 or surpasses it.

Sell (Short) whenever the ratio reaches 0.35 or breaks it.

def signal(Data, pcr, buy, sell):

Data = adder(Data, 2)

for i in range(len(Data)):

if Data[i, pcr] >= 0.85 and Data[i - 1, buy] == 0 and Data[i - 2, buy] == 0 and Data[i - 3, buy] == 0:

Data[i, buy] = 1 elif Data[i, pcr] <= 0.35 and Data[i - 1, sell] == 0 and Data[i - 2, sell] == 0 and Data[i - 3, sell] == 0:

Data[i, sell] = -1

return Datamy_data = signal(my_data, 0, 2, 3)def signal_chart_ohlc_color(Data, what_bull, window = 1000):

Plottable = Data[-window:, ]

fig, ax = plt.subplots(figsize = (10, 5))

plt.plot(Data[-window:, 1], color = 'black', linewidth = 1) for i in range(len(Plottable)):

if Plottable[i, 2] == 1:

x = i

y = Plottable[i, 1]

ax.annotate(' ', xy = (x, y),

arrowprops = dict(width = 9, headlength = 11, headwidth = 11, facecolor = 'green', color = 'green')) elif Plottable[i, 3] == -1:

x = i

y = Plottable[i, 1]

ax.annotate(' ', xy = (x, y),

arrowprops = dict(width = 9, headlength = -11, headwidth = -11, facecolor = 'red', color = 'red'))

ax.set_facecolor((0.95, 0.95, 0.95))

plt.legend()

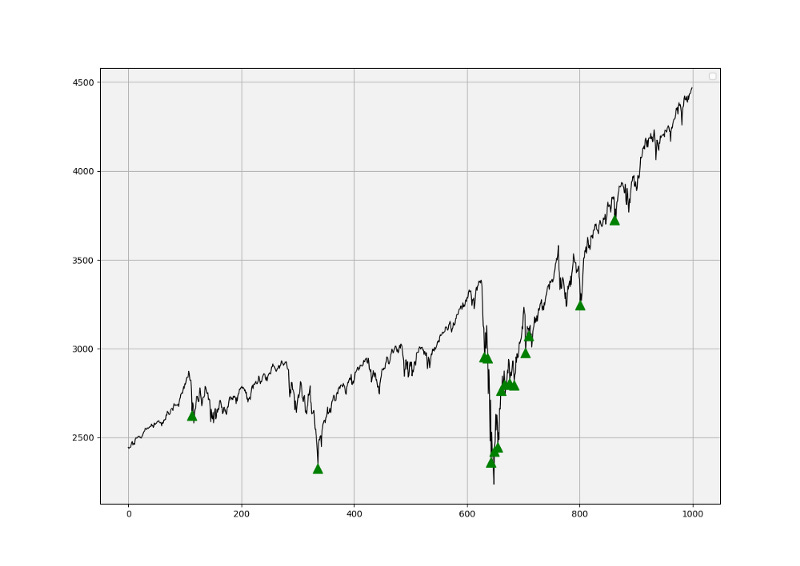

plt.grid()signal_chart_ohlc_color(my_data, 1, window = 1000)The code above gives us the signals shown in the chart. As a buying-the-dips timing indicator, the Put-Call Ratio may require some tweaking to improve the signals. Let us evaluate this using one metric for simplicity, the signal quality.

The signal quality is a metric that resembles a fixed holding period strategy. It is simply the reaction of the market after a specified time period following the signal. Generally, when trading, we tend to use a variable period where we open the positions and close out when we get a signal on the other direction or when we get stopped out (either positively or negatively). Sometimes, we close out at random time periods. Therefore, the signal quality is a very simple measure that assumes a fixed holding period and then checks the market level at that time point to compare it with the entry level. In other words, it measures market timing by checking the reaction of the market.
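Before the full function, the idea can be checked by hand on a toy series. The sketch below uses hypothetical prices and long-only signals, with a holding period of 3 bars chosen purely to keep the example short; it is an illustration of the definition, not the function used for the results in this article.

```python
import numpy as np

# Hypothetical closing prices and long signals (1 = buy), for illustration only
close = np.array([100.0, 98.0, 97.0, 101.0, 103.0, 99.0, 96.0, 102.0])
buy = np.array([0, 1, 0, 0, 1, 0, 0, 0])
period = 3  # fixed holding period, in bars

# Keep only the signals that still have `period` bars of data after them
entries = np.flatnonzero(buy == 1)
entries = entries[entries + period < len(close)]

# Market reaction: price `period` bars after the signal minus the entry price
reaction = close[entries + period] - close[entries]

wins = int((reaction > 0).sum())
losses = int((reaction < 0).sum())
quality = wins / (wins + losses)
print(f"Signal quality = {quality:.2%}")
```

Here the first signal is followed by a gain of 5 points and the second by a loss of 1 point, so one trade out of two is a winner.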

    # Choosing an example of 21 periods
    period = 21

    def signal_quality(Data, closing, buy, sell, period, where):
        Data = adder(Data, 1)
        for i in range(len(Data)):
            try:
                if Data[i, buy] == 1:
                    Data[i + period, where] = Data[i + period, closing] - Data[i, closing]
                if Data[i, sell] == -1:
                    Data[i + period, where] = Data[i, closing] - Data[i + period, closing]
            except IndexError:
                pass
        return Data

    # Using 21 Periods as a Window of signal Quality Check
    my_data = signal_quality(my_data, 1, 2, 3, period, 4)
    positives = my_data[my_data[:, 4] > 0]
    negatives = my_data[my_data[:, 4] < 0]

    # Calculating Signal Quality (stored under a new name so that the signal_quality function is not overwritten)
    quality = len(positives) / (len(negatives) + len(positives))
    print('Signal Quality = ', round(quality * 100, 2), '%')

Strategy #2: The VIX

It should be apparent now that volatility is extremely important in the financial markets, and being able to predict it will greatly improve trading skills, let alone enable the trader to use pure volatility-betting strategies (e.g. straddles and variance swaps).

A derivative is an instrument or contract that derives its value from an underlying asset, rate, or anything that has value (even weather and electricity can have derivative contracts). Derivatives are one of the main innovations in finance and have played lead roles in many historical events.

Options are rights and not obligations to buy or sell an asset in the future at a fixed price called strike. However, they are not free (an upfront payment is required as opposed to forwards and futures). This right comes at a cost that is paid by the buyer of the option to the seller (writer) on the inception date. We call it the option’s premium. The main key input in options is volatility. If the volatility of these options is going up, and we know that volatility is generally negatively correlated with the asset’s returns, then this signals fear in the market.

We also know that there is historical volatility (fluctuations of the asset’s returns of the previous observations) which is calculated through standard deviation and there is what we call implied volatility that is not actually observed but inferred from market prices of options. It is generally a forward-looking measure and is more useful at predicting future volatility. Basic charting will allow us to see the negative correlation between the VIX and the S&P500 index. It almost mirrors it.
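As a minimal sketch of the historical volatility mentioned above, the standard deviation of returns can be computed as follows. The prices are hypothetical, and annualizing with 252 trading days is a common convention that I am assuming here, not something taken from the data used in this article.

```python
import numpy as np

# Hypothetical daily closing prices (illustrative values only)
close = np.array([100.0, 101.0, 99.5, 100.5, 102.0, 101.0, 103.0])

# Daily log returns
returns = np.diff(np.log(close))

# Historical volatility: sample standard deviation of the returns,
# annualized with the 252-trading-day convention (an assumption)
daily_vol = returns.std(ddof=1)
annualized_vol = daily_vol * np.sqrt(252)
print(f"Annualized historical volatility = {annualized_vol:.2%}")
```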

As previously mentioned, implied volatility is inferred from options’ market prices; there is no closed-form formula that gives it directly. Hence, if we know all of the other inputs (including the price of the option), we can back out the implied volatility by solving the Black-Scholes equation for it. To conclude, what is the VIX?

The CBOE’s Volatility Index is a continuously updated (real time) index that shows the market’s expectations for the strength of short-term changes of the S&P500. Since it is calculated from near-term options on the S&P500, it can be considered as a 30-day projection of future volatility. Rarely do we see leading time series in finance and the VIX is one of them.
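Since the VIX is built from option prices, it may help to see how an implied volatility can be backed out of a single option price, as described earlier. The sketch below is a generic Black-Scholes illustration with hypothetical inputs and a bisection solver of my choosing; it is not the CBOE’s methodology for the VIX.

```python
from math import erf, exp, log, sqrt

def norm_cdf(x):
    # Standard normal cumulative distribution function
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))

def bs_call_price(spot, strike, rate, maturity, vol):
    # Black-Scholes price of a European call on a non-dividend-paying asset
    d1 = (log(spot / strike) + (rate + 0.5 * vol ** 2) * maturity) / (vol * sqrt(maturity))
    d2 = d1 - vol * sqrt(maturity)
    return spot * norm_cdf(d1) - strike * exp(-rate * maturity) * norm_cdf(d2)

def implied_vol(price, spot, strike, rate, maturity, low=1e-4, high=5.0, tol=1e-8):
    # Bisection: the call price increases with volatility, so the root stays bracketed
    for _ in range(200):
        mid = 0.5 * (low + high)
        if bs_call_price(spot, strike, rate, maturity, mid) > price:
            high = mid
        else:
            low = mid
        if high - low < tol:
            break
    return 0.5 * (low + high)

# Hypothetical inputs: price an option at 20% volatility, then recover that volatility
market_price = bs_call_price(spot=100.0, strike=100.0, rate=0.01, maturity=0.5, vol=0.20)
recovered = implied_vol(market_price, 100.0, 100.0, 0.01, 0.5)
print(f"Implied volatility = {recovered:.4f}")
```

The round trip (pricing at a known volatility, then solving for it) is only a sanity check that the solver inverts the pricing function.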

Let us download a sample of both historical data of the VIX and the S&P500 since 2017. I have spared you the grunt work and compiled both into an excel file downloadable from my GitHub below. This way we can import the data directly into the Python interpreter and analyze it.

GitHub - sofienkaabar/sofienkaabar: Config files for my GitHub profile.


The first step is to download the VIX.xlsx file to your computer, then open the Python interpreter and set the working directory to the folder where the Excel file is located. Now, we have to import it and structure it.

    my_data = pd.read_excel('VIX.xlsx')
    my_data = np.array(my_data)

Now, we should have an array called my_data with both the S&P500’s and the VIX’s historical data since 2017.

Subjectively, we can place a barrier at 35, where we tend to see resistance, with the exception of the Covid-19 mania. This will be our trading trigger for buy signals. Surely, some hindsight bias exists here, but the idea is not to formulate a past trading strategy but rather to see if the barrier will continue working in the future. Following the intuition, we can have the following trading rule:

Buy (Long) whenever the VIX reaches 35 or surpasses it.

    def signal(Data, vix, buy):
        Data = adder(Data, 1)
        for i in range(len(Data)):
            # A new signal is allowed only if none was given on the previous five bars
            if Data[i, vix] >= 35 and Data[i - 1, buy] == 0 and Data[i - 2, buy] == 0 and Data[i - 3, buy] == 0 and Data[i - 4, buy] == 0 and Data[i - 5, buy] == 0:
                Data[i, buy] = 1
        return Data

    # Assuming column 0 holds the VIX and column 1 the S&P500, as in the signal quality call below
    my_data = signal(my_data, 0, 2)

As a buying-the-dips indicator, the VIX may require some tweaking to improve the signals. Let us evaluate this using one metric for simplicity, the signal quality.

    # Choosing an example of 21 periods
    period = 21

    # Using 21 Periods as a Window of signal Quality Check
    # The strategy is long-only, so the buy column is also passed as the sell column (it never holds -1)
    my_data = signal_quality(my_data, 1, 2, 2, period, 3)
    positives = my_data[my_data[:, 3] > 0]
    negatives = my_data[my_data[:, 3] < 0]

    # Calculating Signal Quality
    quality = len(positives) / (len(negatives) + len(positives))
    print('Signal Quality = ', round(quality * 100, 2), '%')
    # Output: Signal Quality = 86.67%

The Dark Index Strategy

The Dark Index provides a way of peeking into the secret (dark) exchanges. It is calculated as an aggregate value of many dark pool indicators (another type of release provided by squeezemetrics) and measures the hidden market sentiment. When the values of this indicator are higher than usual, it means that more buying occurred in dark pools than usual. We can profit from this informational gap. It is therefore a trail of liquidity providers (i.e. negatively correlated with the market it follows due to hedging activities).

We will use Python to download the data automatically from the website. We will be using a library called selenium, famous for fetching and downloading data online. The first thing we need to do is to import the necessary libraries.

    # Importing Libraries
    import pandas as pd
    import numpy as np
    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By

We will assume Google Chrome for this task. Selenium supports other web browsers, so if you are using another one, the code below will work once it is changed to the name of your browser, e.g. Firefox.

    # Calling Chrome (Selenium 4 syntax; the raw string keeps the backslashes
    # of the Windows path from being read as escape sequences)
    driver = webdriver.Chrome(service = Service(r'C:\user\your_file/chromedriver.exe'))

    # URL of the Website from Where to Download the Data
    url = "https://squeezemetrics.com/monitor/dix"

    # Opening the website
    driver.get(url)

    # Getting the button by ID
    button = driver.find_element(By.ID, "fileRequest")

To understand what we are trying to do, we can think of this as an assistant that opens a Google Chrome window, types the given address, and searches for the download button until it is found. The download will include the historical data of the S&P500 as well as the Dark Index and the GEX, an indicator that will be discussed just after. For now, the focus is on the Dark Index.

All that is left now is to simply click the download button, which is done using the following code:

    # Clicking on the button
    button.click()

You should see a completed download called DIX in the form of a csv file. It is time to import the historical data file into the Python interpreter and structure it the way we want it to be. Make sure the working directory of the interpreter is the downloads folder where the new DIX file is found.

Next, we can use the following syntax to organize and clean the data. Remember, we have a csv file composed of three columns, the S&P500, the Dark Index, and the GEX.

    # Importing the CSV File Using pandas
    my_data = pd.read_csv('DIX.csv')

    # Transforming the File to an Array
    my_data = np.array(my_data)

    # Eliminating the time stamp
    my_data = my_data[:, 1:3]

Right about now, we should have a clean 2-column array with the S&P500 and the Dark Index. Let us write the conditions for the trade following the intuition we have seen in the DIX section:

A bullish signal is generated if the DIX reaches or surpasses 0.475, the historical resistance level, while no bullish signal was generated on the previous three readings, so that we eliminate duplicates.

No bearish signal is generated so that we harness the DIX’s power in an upward sloping market. The reason we are doing this is that we want to build a trend-following system. However, it may also be interesting to try and find bearish signals on the DIX. For simplicity, we want to see how well it times the market using only long signals.

    # Creating the Signal Function
    def signal(Data, dix, buy):
        Data = adder(Data, 1)
        for i in range(len(Data)):
            if Data[i, dix] >= 0.475 and Data[i - 1, buy] == 0 and Data[i - 2, buy] == 0 and Data[i - 3, buy] == 0:
                Data[i, buy] = 1
        return Data

    my_data = signal(my_data, 1, 2)

The code above gives us the signals shown in the chart. We can see that they are of good quality, and the false signals typically occur only in times of severe volatility and market-related issues. As a buying-the-dips timing indicator, the Dark Index may be promising. Let us evaluate this using one metric for simplicity, the signal quality.

    # Using 21 Periods as a Window of signal Quality Check
    # The strategy is long-only, so the buy column is also passed as the sell column (it never holds -1)
    my_data = signal_quality(my_data, 0, 2, 2, 21, 3)
    positives = my_data[my_data[:, 3] > 0]
    negatives = my_data[my_data[:, 3] < 0]

    # Calculating Signal Quality
    quality = len(positives) / (len(negatives) + len(positives))
    print('Signal Quality = ', round(quality * 100, 2), '%')
    # Output: 71.13 %

The Gamma Exposure Index Strategy

The Gamma Exposure Index, also known as the GEX, relates to the sensitivity of option contracts to changes in the underlying price. When imbalances occur, the effects of market makers’ hedges may cause price swings (such as short squeezes). The absolute value of the GEX index is simply the number of shares that will be bought or sold to push the price in the opposite direction of the trend when a 1% absolute move occurs. For example, if the price moves +1% with the GEX at 5.0 million, then 5.0 million shares will come pouring in to push the market to the downside as a hedge.

As documented, the GEX acts as a brake on prices when it is high enough with a rising market and as an accelerator when it is low enough. The below graph shows the values of the S&P500 charted along the values of the Gamma Exposure Index.

The data is the same DIX.csv file downloaded with selenium from https://squeezemetrics.com/monitor/dix in the Dark Index section above. The download includes the historical data of the S&P500 as well as the Gamma Exposure Index and the Dark Index. For now, the focus is on the GEX.

Next, we can use the following syntax to organize and clean the data. Remember, we have a csv file composed of three columns: the S&P500, the Dark Index (which we drop here), and the GEX, which we will use to time the market.

    # Importing the CSV File Using pandas
    my_data = pd.read_csv('DIX.csv')

    # Transforming the File to an Array
    my_data = np.array(my_data)

    # Eliminating the time stamp
    my_data = my_data[:, 1:4]

    # Deleting the Middle Column
    my_data = deleter(my_data, 1, 1)

Right about now, we should have a clean 2-column array with the S&P500 and the Gamma Exposure Index. Let us write the conditions for the trade following the intuition we have seen in the GEX section:

A bullish signal is generated if the GEX reaches or breaks the zero level while the previous three GEX readings are above zero so that we eliminate duplicates.

No bearish signal is generated so that we harness the GEX’s power in an upward sloping market. The reason we are doing this is that we want to build a trend-following system. However, it may also be interesting to try and find bearish signals on the GEX. For simplicity, we want to see how well it times the market using only long signals.

    # Creating the Signal Function
    def signal(Data, gex, buy):
        Data = adder(Data, 1)
        for i in range(len(Data)):
            # The GEX reaches or breaks zero while the previous three readings were above zero
            if Data[i, gex] <= 0 and Data[i - 1, gex] > 0 and Data[i - 2, gex] > 0 and Data[i - 3, gex] > 0:
                Data[i, buy] = 1
        return Data

    my_data = signal(my_data, 1, 2)

The code above gives us the signals shown in the chart. We can see that they are of good quality, and the false signals typically occur only in times of severe volatility and market-related issues. As a buying-the-dips timing indicator, the GEX may be promising. Let us evaluate this using one metric for simplicity, the signal quality.

    # Using 21 Periods as a Window of signal Quality Check
    # The strategy is long-only, so the buy column is also passed as the sell column (it never holds -1)
    my_data = signal_quality(my_data, 0, 2, 2, 21, 3)
    positives = my_data[my_data[:, 3] > 0]
    negatives = my_data[my_data[:, 3] < 0]

    # Calculating Signal Quality
    quality = len(positives) / (len(negatives) + len(positives))
    print('Signal Quality = ', round(quality * 100, 2), '%')
    # Output: 74.36 %

The Composite Index Strategy

To create the Composite Index, we need to download the Dark Index and the Gamma Exposure Index first. Luckily, the indicators come with the values of the S&P500 in parallel so that they can be compared to each other.

The data is the same DIX.csv file downloaded with selenium from https://squeezemetrics.com/monitor/dix in the Dark Index section above. The download includes the historical data of the S&P500 as well as the Gamma Exposure Index and the Dark Index.

Next, we can use the following syntax to organize and clean the data. Remember, we have a csv file composed of three columns, the S&P500, the DIX, and the GEX.

    # Importing the CSV File Using pandas
    my_data = pd.read_csv('DIX.csv')

    # Transforming the File to an Array
    my_data = np.array(my_data)

    # Eliminating the time stamp
    my_data = my_data[:, 1:4]

We now have three columns, the S&P500 historical closing values, the DIX, and the GEX. We want to code the following condition for the Composite Index so that it reflects both indicators:

Whenever we have a buy signal from both the GEX and the DIX at the same time, the composite signals a strong buy signal and a bottom in the equity market.
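Before the full function, the rule can be checked on a handful of readings. The sketch below uses hypothetical DIX and GEX values (the GEX is in arbitrary units) and the same thresholds as the individual strategies; it is a vectorized illustration of the condition, not the function used for the results in this article.

```python
import numpy as np

# Hypothetical DIX and GEX readings (illustrative values, GEX in arbitrary units)
dix = np.array([0.43, 0.48, 0.47, 0.49, 0.45])
gex = np.array([2.1, -0.3, -1.0, 1.5, 0.0])

# Thresholds taken from the individual strategies: DIX >= 0.475 and GEX <= 0
dix_buy = dix >= 0.475
gex_buy = gex <= 0

# The composite flags a buy only when both conditions hold on the same bar
composite_buy = dix_buy & gex_buy
print(np.flatnonzero(composite_buy))
```

Only the second bar satisfies both conditions at once, which shows how much stricter the composite is than either indicator alone.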

    def signal(Data, dix, gex, composite):
        Data = adder(Data, 2)
        for i in range(len(Data)):
            if Data[i, gex] <= 0:
                Data[i, gex] = -1
            else:
                Data[i, gex] = 0
        for i in range(len(Data)):
            if Data[i, dix] >= 0.475:
                Data[i, dix] = -1
            else:
                Data[i, dix] = 0
        for i in range(len(Data)):
            if Data[i, dix] == -1 and Data[i, gex] == -1:
                Data[i, composite] = -1
        return Data

    # Columns: 0 = S&P500, 1 = DIX, 2 = GEX, 3 = composite (inferred from the slicing above)
    my_data = signal(my_data, 1, 2, 3)

The Composite Index will look unusual when compared to other indicators but is very easy to interpret. Whenever the values are at -1, shown as brown vertical lines, we have a buy signal.

The code above gives us the signals shown in the chart. We can see that they are of good quality and typically there are no false signals when the holding period is optimized. As a buying-the-dips timing indicator, the Composite Index may outperform its two components when they are used individually. Let us evaluate this using one metric for simplicity, the signal quality.

    # Using 21 Periods as a Window of signal Quality Check
    # The composite flags buys with -1 whereas signal_quality() looks for 1 in the buy column,
    # so the flag is switched to 1 first; the same column is then passed as the sell column,
    # since the strategy is long-only and the column no longer holds any -1
    my_data[my_data[:, 3] == -1, 3] = 1
    my_data = signal_quality(my_data, 0, 3, 3, 21, 4)
    positives = my_data[my_data[:, 4] > 0]
    negatives = my_data[my_data[:, 4] < 0]

    # Calculating Signal Quality
    quality = len(positives) / (len(negatives) + len(positives))
    print('Signal Quality = ', round(quality * 100, 2), '%')
    # Output: 84.21 %

A signal quality of 84.21% means that out of 100 trades, we tend to see a higher price 21 periods after the signal in about 84 of them. The signal frequency may need to be addressed, as there have not been many signals since 2011 (~ 19 trades) because the conditions are strict. Of course, perfection is the rarest word in finance and this technique can sometimes give false signals, as any strategy can.
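The small number of trades matters when reading that percentage. As a rough sketch, assuming the figure comes from 19 trades as quoted above, 84.21% corresponds to 16 winners out of 19; the winner count is my inference from the two numbers and is not reported directly.

```python
# Inferred from the figures quoted above (not reported directly):
# 16 winners out of 19 trades
winners, trades = 16, 19
quality = winners / trades
print(f"Signal quality = {quality:.2%}")

# With so few trades, a single extra losing trade already moves the figure noticeably
quality_one_more_loser = winners / (trades + 1)
print(f"With one more loser = {quality_one_more_loser:.2%}")
```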

Conclusion

Remember to always do your back-tests. You should always believe that other people are wrong. My indicators and style of trading may work for me but maybe not for you.

I am a firm believer in not spoon-feeding. I have learnt by doing and not by copying. You should get the idea, the function, the intuition, and the conditions of the strategy, and then develop an (even better) one yourself so that you back-test and improve it before deciding to take it live or to eliminate it.

To sum up, are the strategies I provide realistic? Yes, but only by optimizing the environment (robust algorithm, low costs, honest broker, proper risk management, and order management). Are the strategies provided only for the sole use of trading? No, it is to stimulate brainstorming and getting more trading ideas as we are all sick of hearing about an oversold RSI as a reason to go short or a resistance being surpassed as a reason to go long. I am trying to introduce a new field called Objective Technical Analysis where we use hard data to judge our techniques rather than rely on outdated classical methods.